In today’s tech-driven world, AI voice assistants have become ubiquitous. Whether it’s Siri helping you set a reminder, Alexa playing your favorite song, or Google Assistant answering a trivia question, these digital companions have become part of everyday life. But have you ever wondered how these systems understand your voice, interpret your commands, and respond so accurately? The answer lies in a blend of three technologies: speech recognition, Natural Language Processing (NLP), and Machine Learning (ML). In this post, we’ll take a deep dive into how these components work together to create the responsive, intelligent assistants we rely on daily.

This exploration will cover the fundamentals of each technology, how they interact, the role of data, the challenges involved, and where this technology is headed. By the end, you’ll have a clear picture of the intricate machinery powering your voice-activated devices.

What Are AI Voice Assistants?

Before diving into the technical details, let’s define what AI voice assistants are. An AI voice assistant is a software program that uses artificial intelligence to understand spoken language, process requests, and perform tasks or provide responses. Examples include Amazon’s Alexa, Apple’s Siri, Google Assistant, and Microsoft’s Cortana. These assistants can schedule appointments, control smart home devices, send messages, provide weather updates, and even engage in casual conversation.

At their core, AI voice assistants rely on three pillars:

- Speech Recognition: Converting your spoken words into text.

- Natural Language Processing (NLP): Understanding the meaning behind that text.

- Machine Learning (ML): Enabling the system to learn, improve, and adapt over time.

Together, these technologies transform sound waves into actionable insights. Let’s break them down one by one.

Speech Recognition: Turning Sound into Words

The journey of an AI voice assistant begins when you speak. Your voice—a complex wave of sound—must first be converted into something the system can understand. This is where speech recognition comes in.

How Speech Recognition Works

Speech recognition, also known as Automatic Speech Recognition (ASR), is the process of converting spoken language into text. Here’s how it happens:

- Audio Input: When you say, “Hey Siri, what’s the weather like?” your voice is captured by a microphone as an analog signal.

- Signal Processing: The analog signal is digitized—broken down into a series of numerical values representing the sound wave’s amplitude over time. Noise reduction techniques filter out background sounds (like a barking dog or traffic) to focus on your voice.

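- Feature Extraction: The system analyzes the digitized audio in short overlapping frames to identify key characteristics, such as pitch, frequency content, and timing. These features, commonly mel-frequency cepstral coefficients (MFCCs) or log-mel spectrogram values, are distilled into a compact form called a “feature vector.”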

- Acoustic Modeling: The feature vectors are matched against an acoustic model—a statistical representation of how different sounds (phonemes) are pronounced. For instance, the model knows what the “w” sound in “weather” typically looks like.

- Language Modeling: To refine the output, a language model predicts the most likely sequence of words based on grammar, context, and vocabulary. For example, it knows “weather” is more likely than “whether” in this context.

- Text Output: The system produces a text transcription of your speech, such as “What’s the weather like?”
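The signal-processing and feature-extraction steps above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular assistant works: a generated sine wave stands in for the microphone signal, and log energy plus zero-crossing rate stand in for richer features such as MFCCs. The function names, frame sizes, and sample rate are all assumptions chosen for the example.

```python
import math

def digitize(duration_s=0.02, sample_rate=8000, freq_hz=440.0, bits=8):
    """Sample a sine wave (a stand-in for the analog mic signal) and quantize it."""
    levels = 2 ** (bits - 1) - 1  # 127 for signed 8-bit samples
    n = round(duration_s * sample_rate)
    return [round(levels * math.sin(2 * math.pi * freq_hz * t / sample_rate))
            for t in range(n)]

def frames(samples, size=80, hop=40):
    """Split the samples into overlapping fixed-size frames."""
    return [samples[i:i + size] for i in range(0, len(samples) - size + 1, hop)]

def feature_vector(frame):
    """Return [log energy, zero-crossing rate] for one frame (simplified features)."""
    energy = sum(s * s for s in frame) / len(frame)
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a < 0) != (b < 0))
    return [math.log(energy + 1e-9), crossings / (len(frame) - 1)]

samples = digitize()
features = [feature_vector(f) for f in frames(samples)]
print(len(samples), "samples ->", len(features), "feature vectors")
```

Each frame becomes one small vector, which is the kind of input an acoustic model would then score against its phoneme representations.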

The Role of Machine Learning in Speech Recognition

Modern speech recognition systems rely heavily on machine learning, particularly deep learning. Earlier ASR systems paired hand-engineered features with statistical models such as hidden Markov models, but today’s assistants use neural networks trained on massive datasets of spoken language. These datasets include diverse accents, dialects, and speaking styles to ensure robustness.

For example, a Recurrent Neural Network (RNN) or a Transformer model might analyze the temporal patterns in your speech, while a Convolutional Neural Network (CNN) extracts local patterns from a spectrogram of the audio. The result is a system that copes far better with mumbling, accents, and noisy environments than its predecessors did.

Challenges in Speech Recognition

Despite its advancements, speech recognition isn’t perfect. Background noise, overlapping voices, and unusual pronunciations can trip it up. Additionally, homophones (words that sound the same but have different meanings, like “to” and “two”) require context to disambiguate—a task that bridges into NLP.
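To make the homophone problem concrete, here is a toy bigram language model that picks between “weather” and “whether” based on the preceding word. It is only a sketch: the five-sentence corpus is invented for the example, and production language models are neural networks trained on vastly more text.

```python
from collections import Counter

# Tiny made-up corpus; a real language model is trained on far more text.
CORPUS = [
    "what is the weather like today",
    "the weather is sunny",
    "how is the weather",
    "i wonder whether it will rain",
    "tell me whether the store is open",
]

def train_bigrams(sentences):
    """Count single words and adjacent word pairs."""
    unigrams, bigrams = Counter(), Counter()
    for sentence in sentences:
        words = ["<s>"] + sentence.split()
        unigrams.update(words)
        bigrams.update(zip(words, words[1:]))
    return unigrams, bigrams

def score(prev_word, word, unigrams, bigrams, vocab_size):
    """Add-one smoothed estimate of P(word | prev_word)."""
    return (bigrams[(prev_word, word)] + 1) / (unigrams[prev_word] + vocab_size)

def pick_homophone(prev_word, candidates, unigrams, bigrams):
    """Choose the candidate the model considers most likely after prev_word."""
    vocab_size = len(unigrams)
    return max(candidates,
               key=lambda w: score(prev_word, w, unigrams, bigrams, vocab_size))

unigrams, bigrams = train_bigrams(CORPUS)
print(pick_homophone("the", ["weather", "whether"], unigrams, bigrams))
print(pick_homophone("wonder", ["weather", "whether"], unigrams, bigrams))
```

The acoustic model cannot tell the two words apart, so the decision rests entirely on which word sequence the language model has seen more often.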

Natural Language Processing: Understanding the Meaning

Once your speech is converted into text, the assistant needs to figure out what you mean. This is where Natural Language Processing (NLP) takes center stage.

What is NLP?

NLP is a branch of AI that enables computers to understand, interpret, and generate human language. In voice assistants, NLP has two key steps:

- Natural Language Understanding (NLU): Deciphering the intent and extracting relevant information from your command.

- Natural Language Generation (NLG): Crafting a coherent, human-like response.

How NLP Works in Voice Assistants

Let’s revisit our example: “What’s the weather like?” Here’s how NLP processes it:

- Tokenization: The sentence is broken into individual words or “tokens”: “What’s,” “the,” “weather,” “like.”

- Part-of-Speech Tagging: Each token is labeled with its grammatical role (e.g., “weather” as a noun, “like” as a preposition).

- Named Entity Recognition (NER): The system identifies entities like locations or dates. If you’d said, “What’s the weather like in New York?” it would tag “New York” as a place.

- Intent Recognition: The assistant determines your goal. In this case, the intent is “get weather information.”

- Slot Filling: The system extracts details needed to fulfill the intent. Here, “weather” is the subject, and the location might default to your current position unless specified.

- Response Generation: Using NLG, the assistant constructs a reply, like “The weather is sunny with a high of 72°F.”
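The tokenization, intent recognition, and slot filling steps above can be approximated with simple rules. The sketch below is deliberately naive: the keyword sets, the place list, and the function names are hypothetical, and real assistants use trained models rather than keyword matching.

```python
import re

# Hypothetical intent keywords and a tiny place list, for illustration only.
INTENT_KEYWORDS = {
    "get_weather": {"weather", "forecast", "temperature"},
    "set_alarm": {"alarm", "wake"},
}
KNOWN_PLACES = ["new york", "london", "tokyo"]

def tokenize(text):
    """Lowercase the text and split it into word tokens, keeping contractions whole."""
    return re.findall(r"[a-z0-9]+(?:'[a-z]+)?", text.lower())

def recognize_intent(tokens):
    """Pick the intent whose keyword set overlaps the tokens the most."""
    token_set = set(tokens)
    best = max(INTENT_KEYWORDS, key=lambda name: len(INTENT_KEYWORDS[name] & token_set))
    return best if INTENT_KEYWORDS[best] & token_set else "unknown"

def fill_slots(text, default_location="current location"):
    """Extract the location slot, falling back to a default when none is named."""
    lowered = text.lower()
    for place in KNOWN_PLACES:
        if place in lowered:
            return {"location": place.title()}
    return {"location": default_location}

def understand(text):
    tokens = tokenize(text)
    return {"tokens": tokens, "intent": recognize_intent(tokens), "slots": fill_slots(text)}

result = understand("What's the weather like in New York?")
print(result["intent"], result["slots"])
```

When no place is mentioned, the location slot falls back to a default, mirroring how an assistant might use your current position.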

Machine Learning in NLP

NLP relies on machine learning models like BERT (Bidirectional Encoder Representations from Transformers) or GPT (Generative Pre-trained Transformer). These models are pre-trained on vast corpora of text—think billions of sentences from books, websites, and conversations. They learn patterns in language, such as syntax, semantics, and context.

For instance, BERT excels at understanding context by looking at words before and after a given term. If you say, “Set an alarm,” it knows you mean a time-based alert, not a fire alarm, based on typical usage patterns.

Challenges in NLP

NLP faces hurdles like ambiguity (“Can you make it quick?” could be a request to hurry or a request for a quick recipe), sarcasm, and cultural nuances. Multilingual support adds complexity: training a model to handle English, Mandarin, and Arabic simultaneously is no small feat.

Machine Learning: The Brain Behind the Operation

Speech recognition and NLP wouldn’t be possible without Machine Learning (ML), the backbone of AI voice assistants. ML enables these systems to learn from data, improve over time, and adapt to individual users.

How ML Powers Voice Assistants

ML involves training algorithms on data to make predictions or decisions. In voice assistants, it’s applied at every stage:

- Training Phase: Developers feed the system labeled data—audio recordings paired with transcriptions for speech recognition, or text with annotated intents for NLP. Neural networks adjust their internal parameters to minimize errors.

- Inference Phase: Once trained, the model processes new inputs (your voice) in real time, predicting the most likely transcription or intent.

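- Continuous Learning: Many assistants refine their models based on user interactions. If you correct Alexa’s misunderstanding, that correction can serve as a training signal, typically folded into a later model update rather than changing the weights on the spot, so the same mistake becomes less likely.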
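The split between training and inference can be shown with a tiny perceptron-style intent classifier. This is a sketch under heavy simplification: the six labeled sentences are invented, the features are bare word sets, and real systems train deep neural networks on far larger datasets.

```python
# Made-up labeled examples: text paired with an annotated intent.
TRAINING_DATA = [
    ("what is the weather like", "get_weather"),
    ("will it rain today", "get_weather"),
    ("is it sunny outside", "get_weather"),
    ("set an alarm for seven", "set_alarm"),
    ("wake me up at six", "set_alarm"),
    ("set a timer alarm", "set_alarm"),
]

def features(text):
    """Represent a sentence as the set of words it contains."""
    return set(text.lower().split())

def predict(weights, text):
    """Inference: score each intent and return the highest-scoring one."""
    words = features(text)
    return max(sorted(weights),
               key=lambda intent: sum(weights[intent].get(w, 0.0) for w in words))

def train(data, epochs=10):
    """Training: adjust weights whenever the current model mislabels an example."""
    weights = {intent: {} for _, intent in data}
    for _ in range(epochs):
        errors = 0
        for text, gold in data:
            guess = predict(weights, text)
            if guess != gold:
                errors += 1
                for w in features(text):
                    weights[gold][w] = weights[gold].get(w, 0.0) + 1.0
                    weights[guess][w] = weights[guess].get(w, 0.0) - 1.0
        if errors == 0:  # every training example is now labeled correctly
            break
    return weights

weights = train(TRAINING_DATA)
print(predict(weights, "what is the weather in paris"))
print(predict(weights, "set an alarm for nine"))
```

Training loops over labeled data until the errors stop, while inference is a single cheap scoring pass, which is why the latter can run in real time.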

Types of ML in Voice Assistants

- Supervised Learning: Used to train speech recognition (audio-to-text mappings) and intent recognition (text-to-action mappings).

- Unsupervised Learning: Helps cluster similar phrases or detect patterns in unlabeled data, like grouping accents.

- Reinforcement Learning: Optimizes responses by rewarding the assistant for successful outcomes (e.g., completing a task correctly).
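As one illustration of the reinforcement learning idea, the sketch below uses an epsilon-greedy strategy to learn which reply style earns the most reward. Everything here is assumed for the example: the three styles, the simulated user who only rewards brief replies, and the reward values. How commercial assistants actually define rewards is not something this post can confirm.

```python
import random

def simulated_feedback(style):
    """Hypothetical reward: this imaginary user only accepts brief replies."""
    return 1.0 if style == "brief" else 0.0

def learn_reply_style(styles, steps=200, epsilon=0.1, seed=0):
    """Estimate the average reward of each style with epsilon-greedy selection."""
    rng = random.Random(seed)
    counts = {s: 0 for s in styles}
    values = {s: 0.0 for s in styles}
    for step in range(steps):
        if step < len(styles):
            style = styles[step]                 # try every option once first
        elif rng.random() < epsilon:
            style = rng.choice(styles)           # explore a random option
        else:
            style = max(styles, key=values.get)  # exploit the best estimate
        reward = simulated_feedback(style)
        counts[style] += 1
        values[style] += (reward - values[style]) / counts[style]  # running mean
    return values

values = learn_reply_style(["detailed", "brief", "humorous"])
best = max(values, key=values.get)
print(best)
```

The occasional random choice keeps the learner from locking onto an early favorite before it has tried the alternatives.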

Personalization Through ML

ML also enables personalization. Over time, your assistant learns your preferences—say, your favorite music genre or frequent contacts. This is often done via user-specific fine-tuning, where the global model adapts to your unique speech patterns and habits.
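A much simpler stand-in for personalization is to count what each user asks for and use the most frequent choice as a default. The class below is a hypothetical sketch of that idea; it does not fine-tune any model, and the user names and genres are invented.

```python
from collections import Counter, defaultdict

class PreferenceProfile:
    """Tracks how often each user requests each genre (a stand-in for fine-tuning)."""

    def __init__(self):
        self._plays = defaultdict(Counter)

    def record(self, user, genre):
        """Log one request from this user."""
        self._plays[user][genre] += 1

    def favorite(self, user, default="popular hits"):
        """Return the user's most requested genre, or a default for new users."""
        plays = self._plays.get(user)
        if not plays:
            return default
        return plays.most_common(1)[0][0]

profile = PreferenceProfile()
for genre in ["jazz", "rock", "jazz", "jazz", "pop"]:
    profile.record("alice", genre)

print(profile.favorite("alice"))
print(profile.favorite("bob"))
```

With a profile like this, a vague request such as “play some music” can resolve to a sensible default for a known user and a generic one for a new user.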

Challenges in ML

ML models require vast amounts of data, raising privacy concerns. They can also inherit biases from training data (e.g., struggling with underrepresented accents) and need regular updates to stay relevant.

The Workflow: Putting It All Together

Now that we’ve explored the components, let’s see how they collaborate in a typical interaction:

- Wake Word Detection: You say “Hey Google,” triggering the assistant. This step uses a lightweight ML model to listen for specific phrases without processing everything you say.

- Speech Recognition: Your command (“What’s the time?”) is converted to text.

- NLP Analysis: The intent (“get time”) is identified, and any parameters (current location) are extracted.

- Task Execution: The assistant fetches the time from an internal clock or API.

- Response Delivery: NLG crafts a reply (“It’s 3:45 PM”), and a Text-to-Speech (TTS) system converts it back to spoken words.

The whole round trip usually takes a fraction of a second to a second or so, thanks to cloud computing and optimized algorithms.
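The workflow above can be strung together as a small pipeline. In this sketch, plain text stands in for audio at both ends, so speech recognition and Text-to-Speech are assumed to happen outside the code, and the wake word check runs on text rather than on sound as a real wake word engine would. All function names are hypothetical.

```python
from datetime import datetime

WAKE_WORD = "hey google"

def detect_wake_word(transcript):
    """Return the command after the wake word, or None if the wake word is absent."""
    lowered = transcript.lower().strip()
    if not lowered.startswith(WAKE_WORD):
        return None
    return lowered[len(WAKE_WORD):].strip(" ,")

def recognize_intent(command):
    """Very rough NLP stage: map the command text to an intent name."""
    return "get_time" if "time" in command else "unknown"

def execute(intent, now):
    """Task execution: look up whatever the intent needs."""
    return {"time": now} if intent == "get_time" else {}

def format_time(moment):
    """Format a datetime as a 12-hour clock string such as 3:45 PM."""
    hour = moment.hour % 12 or 12
    suffix = "AM" if moment.hour < 12 else "PM"
    return f"{hour}:{moment.minute:02d} {suffix}"

def generate_response(intent, result):
    """NLG stage: turn the result into a sentence for the TTS system."""
    if intent == "get_time":
        return "It's " + format_time(result["time"])
    return "Sorry, I didn't catch that."

def handle(transcript, now=None):
    command = detect_wake_word(transcript)
    if command is None:
        return None  # no wake word, so the assistant stays silent
    intent = recognize_intent(command)
    result = execute(intent, now or datetime.now())
    return generate_response(intent, result)

print(handle("Hey Google, what's the time?", now=datetime(2024, 1, 1, 15, 45)))
```

Keeping each stage as its own function mirrors the way the real stages can be developed, swapped, and scaled independently.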

Supporting Technologies

Beyond the core trio, other technologies enhance voice assistants:

- Text-to-Speech (TTS): Converts text responses into natural-sounding speech using neural vocoders like WaveNet.

- Cloud Computing: Offloads heavy processing to remote servers, enabling real-time performance.

- APIs and Integrations: Connects the assistant to external services (e.g., weather APIs or smart home devices).

- Wake Word Engines: Low-power models that run locally to detect phrases like “Alexa.”

Evolution of Voice Assistants

The journey of voice assistants reflects decades of technological leaps, transforming them from basic tools to sophisticated companions.

The Early Days

The story begins in 1961 with IBM’s Shoebox, a rudimentary device that recognized spoken digits and a handful of commands. Limited by hardware and rule-based programming, it was a proof of concept rather than a practical tool. The 1980s and 1990s brought incremental progress—systems like Dragon NaturallySpeaking introduced speech-to-text for dictation, but they struggled with accuracy and required painstaking user training.

The Breakthrough in the 2010s

The 2010s ushered in a revolution. Apple launched Siri in 2011 as part of the iPhone 4S, marking the debut of a consumer-friendly voice assistant. Siri could handle basic tasks like sending texts or checking the weather, powered by early machine learning techniques. Google followed with Google Now, emphasizing predictive assistance, while Amazon’s Alexa, introduced in 2014 with the Echo, redefined the category by integrating with smart homes. These advancements were fueled by two game-changers: deep learning, which improved speech and language models, and big data, providing the vast datasets needed to train them.

Modern Advancements

Today’s voice assistants are conversational powerhouses. They’re context-aware: Google Assistant can follow up “Who’s the president?” with “How old is he?” without losing track. Multilingual support has expanded, with the major assistants each handling multiple languages and regional variants. Innovations like Transformer models (the backbone of BERT and GPT) have sharpened NLP, while edge computing, which processes data on-device rather than in the cloud, boosts speed and privacy. From standalone devices to car integrations, voice assistants have evolved into versatile, indispensable tools.

Challenges and Ethical Considerations

Despite their sophistication, voice assistants grapple with technical and ethical hurdles that shape their development and use.

Technical Challenges

- Accuracy: Misinterpretations—like hearing “call Mom” as “call Tom”—can disrupt user trust, especially in critical tasks like medication reminders.

- Environmental Factors: Noisy settings or thick accents challenge speech recognition, requiring robust noise-cancellation and diverse training data.

- Complexity: Handling nuanced requests (“Play something I’d like”) demands advanced context and user profiling.

Ethical Dilemmas

- Privacy: Always-listening devices record snippets of your life, stored on company servers. High-profile cases—like accidental activations shared publicly—fuel concerns about surveillance and data misuse.

- Bias: Models trained on skewed datasets may struggle with minority accents or languages, excluding certain users from full functionality.

- Security: Voice spoofing risks are real—hackers could mimic your voice to unlock doors or access accounts, highlighting the need for biometric safeguards.

Path to Ethical Design

Solutions include transparent data policies (e.g., opt-in recording), robust encryption, and inclusive datasets reflecting global diversity. Companies must balance innovation with accountability, ensuring users feel secure and respected.

The Future of AI Voice Assistants

The next chapter for voice assistants promises deeper integration and intelligence, reshaping how we interact with technology.

Contextual Awareness

Today’s assistants already handle simple follow-ups, but future systems should carry context across much longer conversations. Ask “What’s the capital of Brazil?” then “How big is it?” and the assistant links the two, offering richer, multi-turn conversations without repetition.
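A bare-bones version of this context tracking is to remember the last entity mentioned and substitute it for a pronoun in the next question. The sketch below does only that, with a hypothetical three-entry lookup table and a single hard-coded question pattern. Real systems resolve references with trained models.

```python
import re

# Small lookup table for the example; a real assistant would query a knowledge source.
CAPITALS = {"brazil": "Brasília", "france": "Paris", "japan": "Tokyo"}

class Conversation:
    """Remembers the last entity so a follow-up pronoun can be resolved."""

    def __init__(self):
        self.last_entity = None

    def ask(self, question):
        match = re.search(r"capital of (\w+)", question.lower())
        if match and match.group(1) in CAPITALS:
            self.last_entity = CAPITALS[match.group(1)]
            return self.last_entity
        # Follow-up: replace the pronoun "it" with the remembered entity.
        if self.last_entity and re.search(r"\bit\b", question, flags=re.IGNORECASE):
            return re.sub(r"\bit\b", self.last_entity, question, flags=re.IGNORECASE)
        return question

chat = Conversation()
first = chat.ask("What's the capital of Brazil?")
second = chat.ask("How big is it?")
print(first)
print(second)
```

The second call returns the rewritten question rather than an answer, which is the point: once the pronoun is resolved, the question can go through the normal pipeline as if the user had named the city.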

Emotion Detection

By analyzing vocal tone and pace, assistants could adapt responses—offering comfort if you sound upset or brevity if you’re hurried. This emotional intelligence could transform them into empathetic companions.

Offline Capabilities

On-device processing will expand, reducing reliance on cloud servers. Picture an assistant that works flawlessly in a subway tunnel or remote area, enhancing privacy and speed by keeping data local.

Multimodal Interaction

Voice will merge with other inputs—say “Turn off the lights” while pointing at them, or see weather visuals on a screen alongside a spoken forecast. This fusion will make interactions more intuitive and versatile.

Broader Applications

Beyond homes, voice assistants will thrive in cars (navigation, diagnostics), healthcare (patient monitoring), and education (interactive tutoring), evolving from tools to proactive partners.

Conclusion

AI voice assistants are a testament to human ingenuity, weaving speech recognition, NLP, and machine learning into a fluid, responsive experience. From the moment you speak to the delivery of a clever reply, intricate algorithms and massive datasets work in harmony. Their evolution, from IBM’s Shoebox to today’s context-savvy systems, shows a relentless push toward smarter, more human-like interaction.

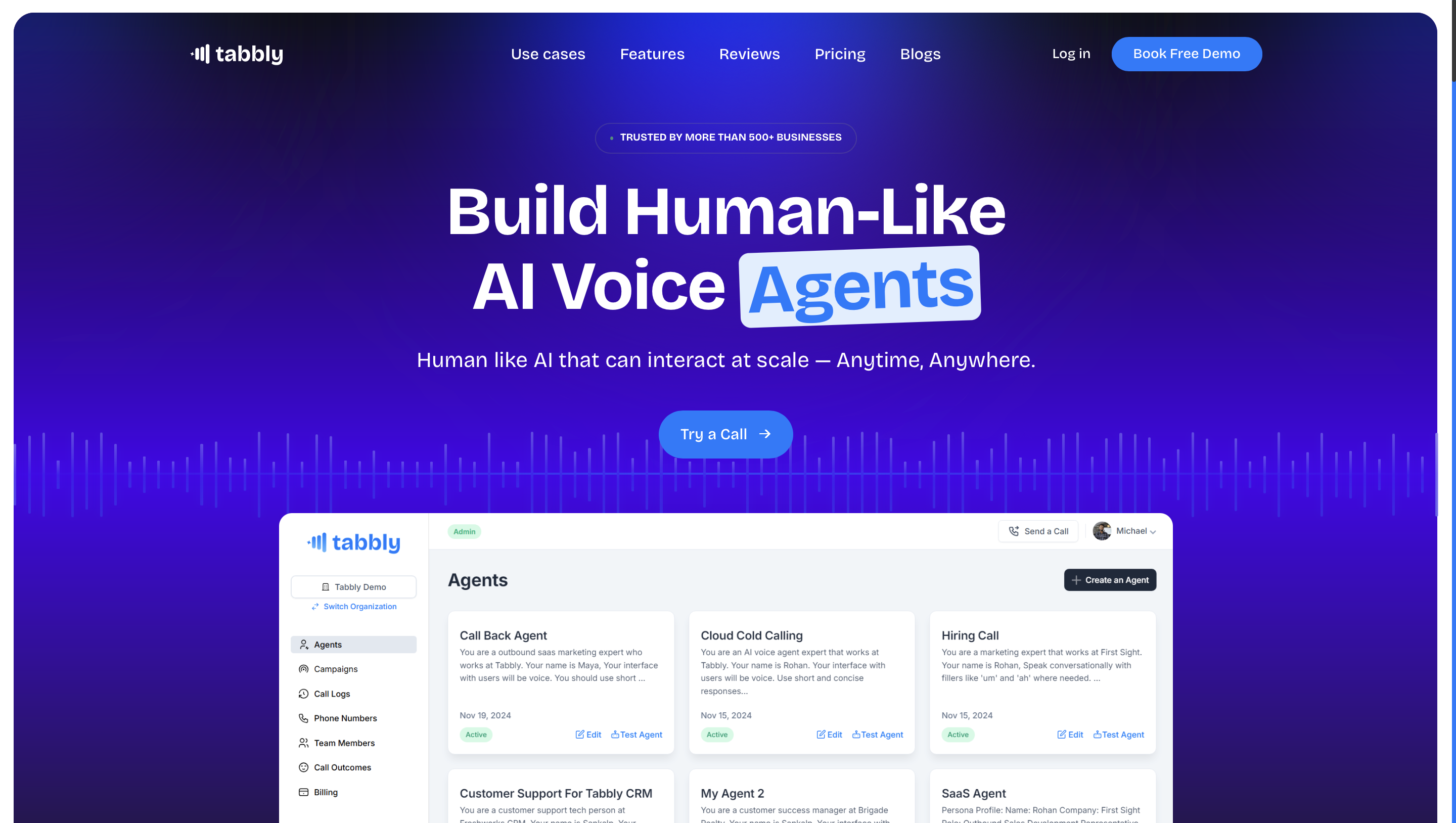

Yet, challenges like privacy and bias remind us that progress must be mindful. As they advance, incorporating features like emotion detection and offline prowess, voice assistants will deepen our connection to technology, making life more convenient and engaging. Innovations like the Tabbly.io AI Voice Agent exemplify this trend, offering cutting-edge solutions that blend seamless voice interaction with practical applications, hinting at the boundless potential ahead.

Next time you call out to your assistant, pause to appreciate the symphony of tech at play. It’s more than a voice—it’s a bridge to an AI-driven future.

Frequently Asked Questions

1. What are AI voice assistants?

AI voice assistants are software programs that use natural language processing (NLP), machine learning, and speech recognition technology to understand and respond to voice commands. Examples include Siri, Alexa, and Google Assistant.

2. How do AI voice assistants work?

AI voice assistants work by processing spoken language through speech recognition technology, interpreting the meaning using NLP, and generating appropriate responses using machine learning algorithms.

3. What are the most popular AI voice assistants?

Some of the most widely used AI voice assistants include Siri (Apple), Alexa (Amazon), Google Assistant, and Microsoft Cortana.

4. Can AI voice assistants understand multiple languages?

Yes, modern AI voice assistants like Google Assistant, Alexa, and Siri support multiple languages and even regional dialects using advanced NLP models.

5. How does machine learning improve AI voice assistants?

Machine learning allows AI voice assistants to learn from user interactions, improve speech recognition accuracy, and provide more relevant responses over time.

6. What is NLP in AI voice assistants?

NLP (Natural Language Processing) is a branch of AI that enables voice assistants to understand human speech, detect intent, and generate meaningful responses.

7. Are AI voice assistants always listening?

Most AI voice assistants activate only after hearing a wake word (e.g., “Hey Siri” or “Alexa”). However, to detect that wake word the device must continuously monitor the microphone audio, which raises privacy concerns.

8. How secure is my data with AI voice assistants?

Security depends on the platform. Companies like Apple, Google, and Amazon encrypt voice data, but some concerns remain regarding data storage and third-party access.

9. Can AI voice assistants be integrated into smart home devices?

Yes, AI voice assistants like Alexa, Google Assistant, and Siri can control smart home devices such as lights, thermostats, and security cameras.

10. What is Tabbly.io, and does it use AI voice assistants?

Tabbly.io is a platform that leverages AI technologies, potentially including NLP and machine learning, to enhance automation, data processing, and digital interactions.